The unusual case of needing to slow folks down

Organization

Sourcegraph

Role

Staff Product Designer

Date

2022

As a product designer, I often wish that designers would share more of the “small stories.” Many of the efforts and projects that we tackle on a daily basis aren’t big or flashy things that make for impressive case studies—and yet, it’s these smaller efforts that build up over time to create thoughtful, intuitive products.

While working on Sourcegraph Cloud (which involved turning a single-tenant, self-hosted product into a multitenant, cloud-based product), my team set out to make it effortless for organization admins to connect their organization to their code hosts, so that they could choose repositories to sync with Sourcegraph.

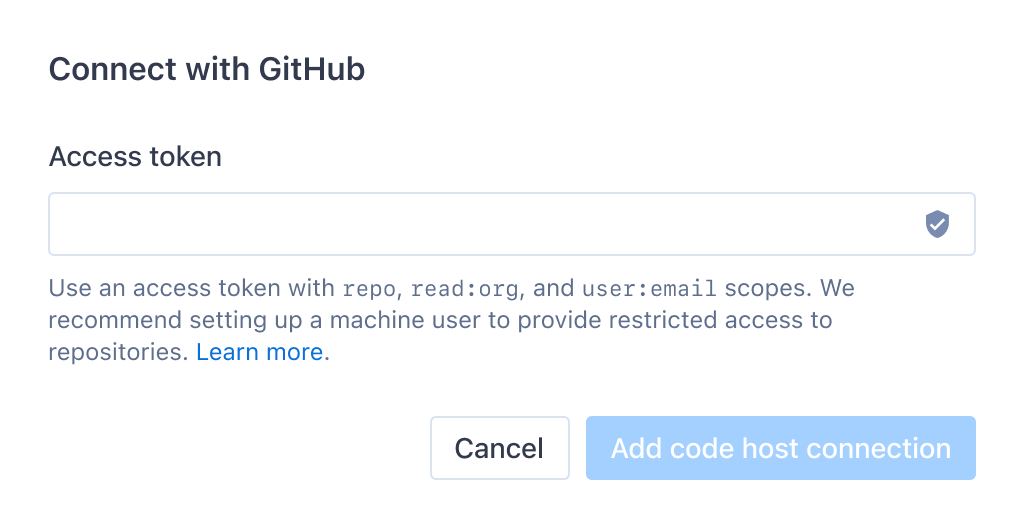

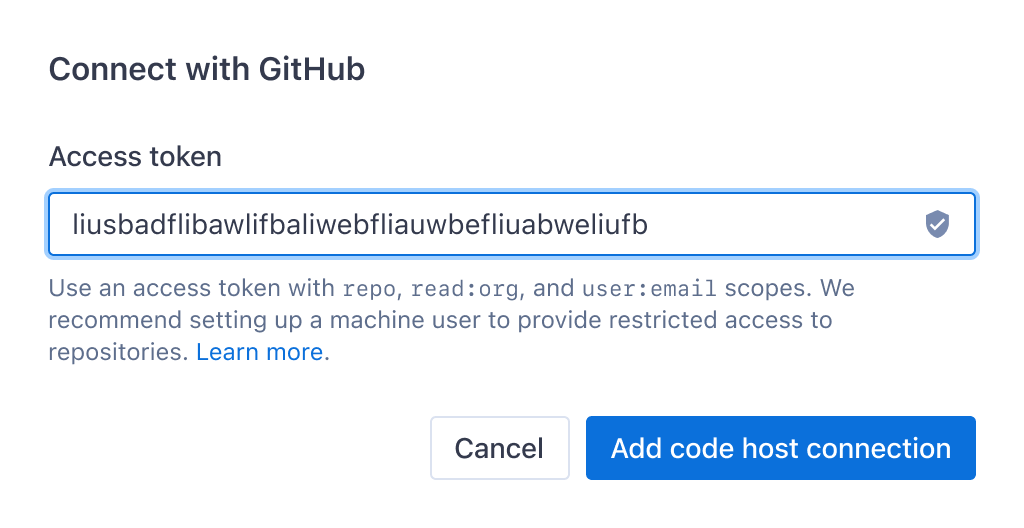

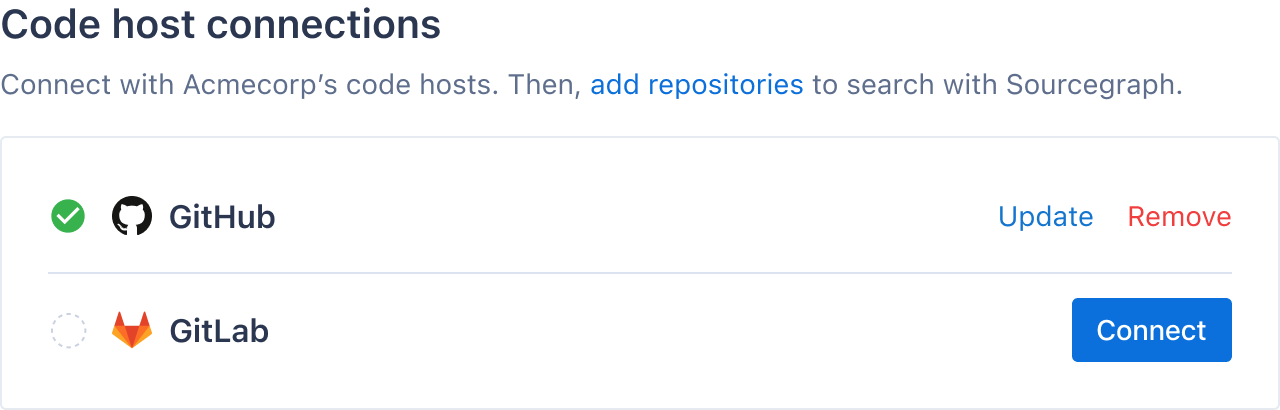

Our earliest iteration of this feature was limited to connecting with GitHub.com and GitLab.com. The simplest and most effective first version used access tokens from these code hosts to establish code host connections. After connecting a code host, organization admins would be able to view a list of all repositories on that code host, and choose which ones to sync with Sourcegraph.

We had previously designed a restrictive privacy model for code visibility on Sourcegraph Cloud. In short: a user could view an organization’s private code on Sourcegraph only if they were a member of that organization on Sourcegraph and also had access to that repository on the code host. However, one of our other early constraints was that we didn’t have role-based access control for organizations, and did not plan to implement it for quite some time.

This meant that in the earliest iteration of private code for organizations in Sourcegraph Cloud, any member of an organization could view the list of repositories available to be synced to Sourcegraph—even if they didn't have access to the code itself.
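As a rough illustration only (not Sourcegraph's actual implementation), the two visibility rules described above can be sketched in Python. Every name here (`Organization`, `CodeHostConnection`, `can_view_code`, `listable_repo_names`, and the sample users and repositories) is hypothetical and exists purely to make the gap between "can view code" and "can see repository names" concrete.

```python
from dataclasses import dataclass, field


@dataclass
class CodeHostConnection:
    """A code host connection created from a single access token (hypothetical model)."""
    # Every repository the token's owner can reach on the code host.
    token_repos: set[str] = field(default_factory=set)


@dataclass
class Organization:
    """An organization with a flat member list, i.e. no role-based access control."""
    members: set[str]
    connection: CodeHostConnection


def can_view_code(user: str, repo: str, org: Organization,
                  code_host_access: dict[str, set[str]]) -> bool:
    """Code is visible only if BOTH conditions hold:
    the user is an org member AND has access to the repo on the code host."""
    return user in org.members and repo in code_host_access.get(user, set())


def listable_repo_names(user: str, org: Organization) -> set[str]:
    """Without roles, any org member can list every repo name the token can reach."""
    if user not in org.members:
        return set()
    return set(org.connection.token_repos)


# Assumed scenario: alice connects the code host with her personal access token.
org = Organization(
    members={"alice", "bob"},
    connection=CodeHostConnection(token_repos={"acme/api", "alice/side-project"}),
)
code_host_access = {
    "alice": {"acme/api", "alice/side-project"},
    "bob": {"acme/api"},
}

bob_can_read = can_view_code("bob", "alice/side-project", org, code_host_access)
bob_sees_names = listable_repo_names("bob", org)
```

In this sketch, `bob_can_read` is `False` while `bob_sees_names` still contains `"alice/side-project"`: the code stays private, but the repository name does not. Connecting with a machine user's token instead would shrink `token_repos` to a pre-defined set.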

As a team, we considered this an acceptable tradeoff, recommending that organizations set up machine users (“fake users” on the code host with access only to a pre-defined set of repositories), and generate access tokens to use with Sourcegraph via that machine user’s account.

However, we quickly learned in user testing and while working with early access customers that the folks setting up an organization wouldn’t necessarily reflect on or consider this recommendation, for a variety of reasons. Most often, they would simply set up the code host connection using their own personal access token.

This created a situation where—although private code would never be shown to someone who shouldn't see it—the names of all of that user’s private repositories would be exposed to all members of their organization.

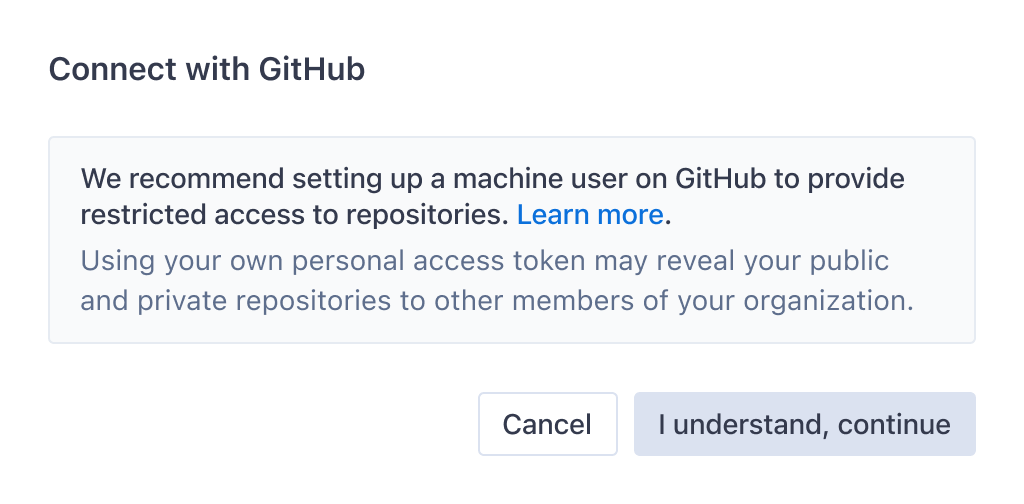

In discussions with the early access customers, we learned they didn’t feel this was an issue. However, in the absence of role-based access control, we felt that we had an obligation to make sure that users were making an informed choice when they chose to use their own access token instead of that of a machine user.

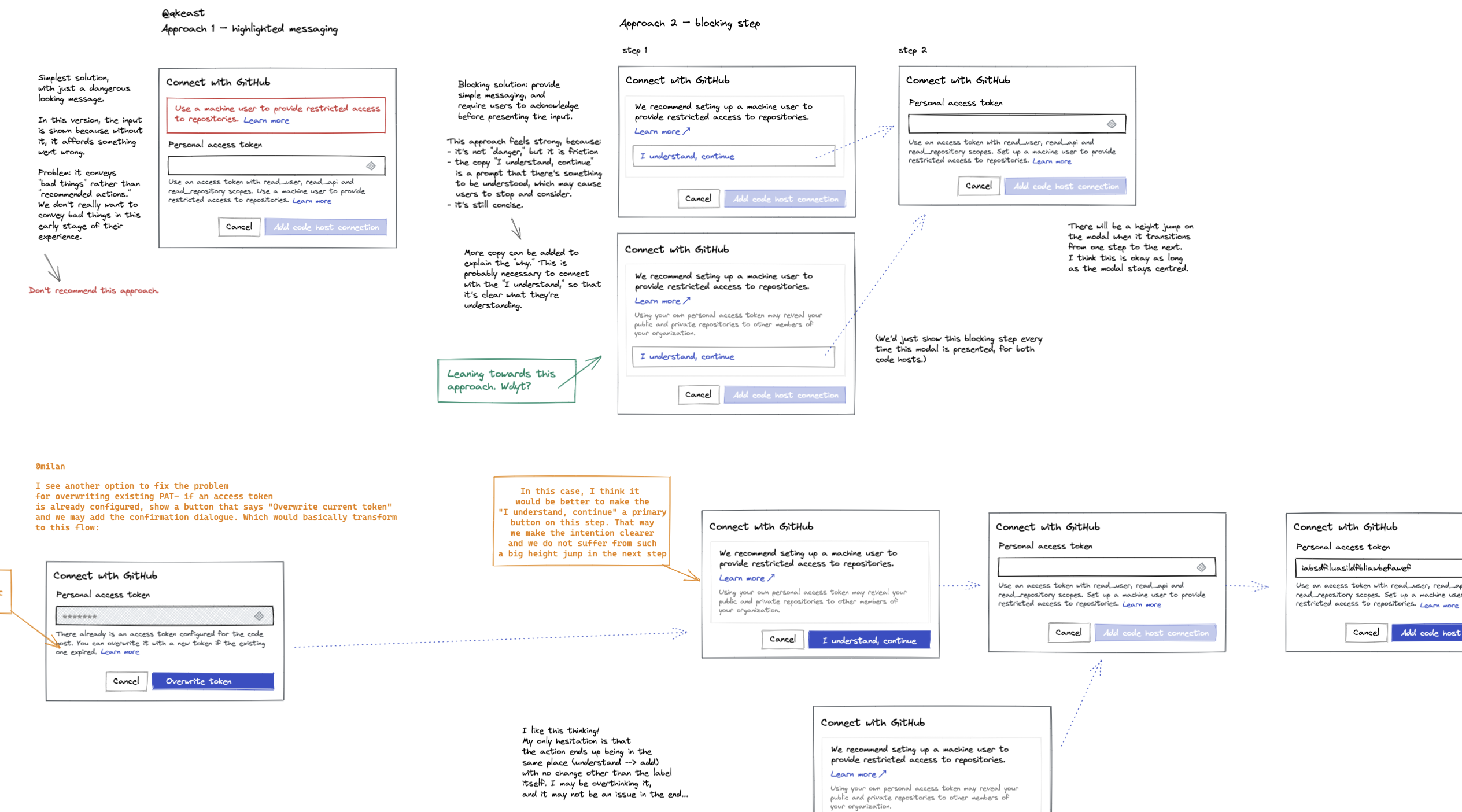

At Sourcegraph, we worked async-first as a globally distributed remote team. I captured this in a one-page design challenge to quickly align the team around the problem.

The team agreed that this was a real problem that we should solve. To keep the effort concise and efficient, I collaborated with a developer on some low-fidelity design exploration of how we might solve it, and we aligned on the approach.

The existing design system made it easy to quickly define and document the high-fidelity implementation details, and we were able to ship this improvement in almost no time at all.

In subsequent hands-on early access customer sessions, every single customer stopped, considered, and commented on the recommendation to use a machine user’s access token. Some users decided to proceed with their own access token, acknowledging the risk, while others proceeded to set up a machine user.

In the end, the result of this effort will never be connected to revenue or performance outcomes, and we will never be able to say we explicitly prevented a security incident. And yet, this was a satisfying outcome: it was quick, efficient, and felt like the right thing to do, aligned with the team’s product design principles.